Background

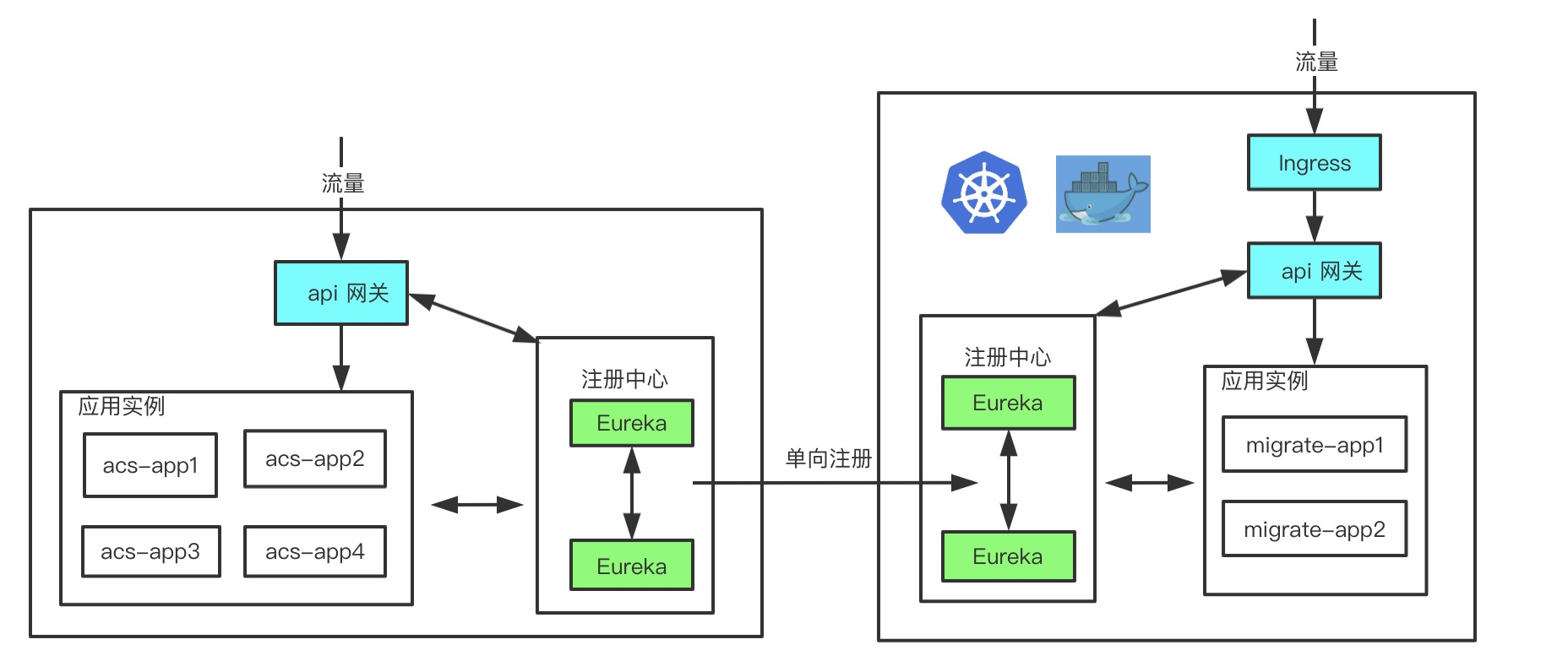

Recently, I was responsible for migrating our Spring Cloud microservices from AliCloud ECS to k8s. To keep the migration smooth, we keep Eureka running in k8s as well, and will not consider removing it until all services are running in k8s.

The implementation process can be roughly divided into two phases: pilot and full-scale rollout.

- In the pilot phase, a few independent peripheral services are migrated to k8s so that we can observe how they run. Some machines are selected as Worker Nodes managed by k8s, while the rest stay outside k8s. By default, connectivity between the k8s Pod network and the ECS network is one-way: Pods can reach ECS hosts, but the unmanaged ECS hosts cannot reach the k8s Pod CIDR directly by IP.

- In the full rollout phase, all hosts are managed by k8s, and the k8s Pod network and the ECS network can reach each other in both directions.

For the pilot phase, where the network is one-way, you can deploy a separate Eureka cluster in k8s and have the ECS Eureka cluster replicate its registrations to the k8s Eureka cluster in one direction only, so that k8s instances can discover and call ECS instances. Since we only migrate independent peripheral services during the pilot, we can tolerate ECS instances being unable to reach k8s instances.

Once the pilot is complete, all ECS nodes can be brought under k8s management as Worker Nodes, at which point the ECS network and k8s Pod network interoperate and Eureka can be switched to bi-directional registration. Once all migrations are complete, the original ECS Eureka cluster can be taken offline.

The dual Eureka cluster approach not only ensures a smooth migration, but also keeps the applications on the two sides relatively isolated during the pilot.

If you like to tinker, you can configure IP routes manually or with a script so that the unmanaged ECS hosts can reach the k8s Pod CIDR directly by IP. This enables bi-directional registration early on and transitions seamlessly into the full rollout phase.

The following example shows how to configure one-way registration from an ECS Eureka cluster to a k8s Eureka cluster, and how to resolve the issues you may run into.

Eureka configuration design

First, add the following hostname entries to the /etc/hosts file.

The following YAML configuration simulates one-way registration of an ECS Eureka cluster to a k8s Eureka cluster. A few notes on the configuration:

- eureka.server.enable-self-preservation=false: disables Eureka self-preservation on the k8s Eureka cluster (the reasons are explained below).
- eureka.client.fetch-registry=false: stops the ECS Eureka servers from fetching the application list at startup. If it were enabled, ECS Eureka would fetch the registry from the k8s Eureka servers at startup, so k8s instances would end up registered in ECS Eureka; ECS instances would then try to call them and fail, because the ECS network cannot reach the Pod network.
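To see why fetch-registry matters during the pilot, here is a toy model in Java. It is a hypothetical sketch, not Eureka's code: registries are plain sets, and the one-way network is reduced to a single rule (ECS hosts cannot reach Pod IPs). The class and field names are made up for illustration.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical toy model of the pilot-phase network; not Eureka's actual classes.
class FetchRegistryModel {
    static final class Instance {
        final String app;
        final boolean inPodNetwork; // true for instances running as k8s Pods

        Instance(String app, boolean inPodNetwork) {
            this.app = app;
            this.inPodNetwork = inPodNetwork;
        }
    }

    /**
     * Registry held by ECS Eureka after startup: its own registrations, plus
     * everything pulled from the k8s Eureka peers when fetch-registry is true.
     */
    static Set<Instance> ecsRegistry(Set<Instance> ecsOwn, Set<Instance> k8sOwn, boolean fetchRegistry) {
        Set<Instance> registry = new LinkedHashSet<>(ecsOwn);
        if (fetchRegistry) {
            registry.addAll(k8sOwn);
        }
        return registry;
    }

    /** During the pilot, ECS hosts cannot reach Pod IPs, so every Pod instance is unreachable from ECS. */
    static long unreachableFromEcs(Set<Instance> registry) {
        return registry.stream().filter(i -> i.inPodNetwork).count();
    }
}
```

With fetch-registry disabled, the ECS registry contains no Pod instances, so nothing an ECS client discovers is unreachable; enabling it pulls k8s-only-app into ECS Eureka, where ECS callers see it but cannot reach it.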

Make sure you are in the Eureka server project directory, then open four terminals and start the four Eureka instances in turn with the following commands.

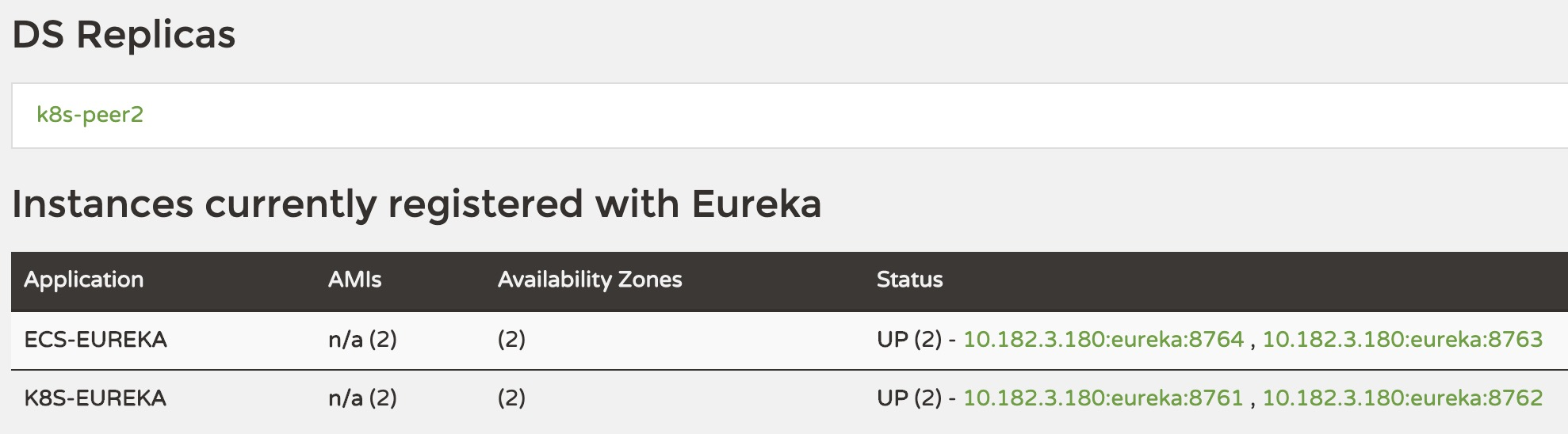

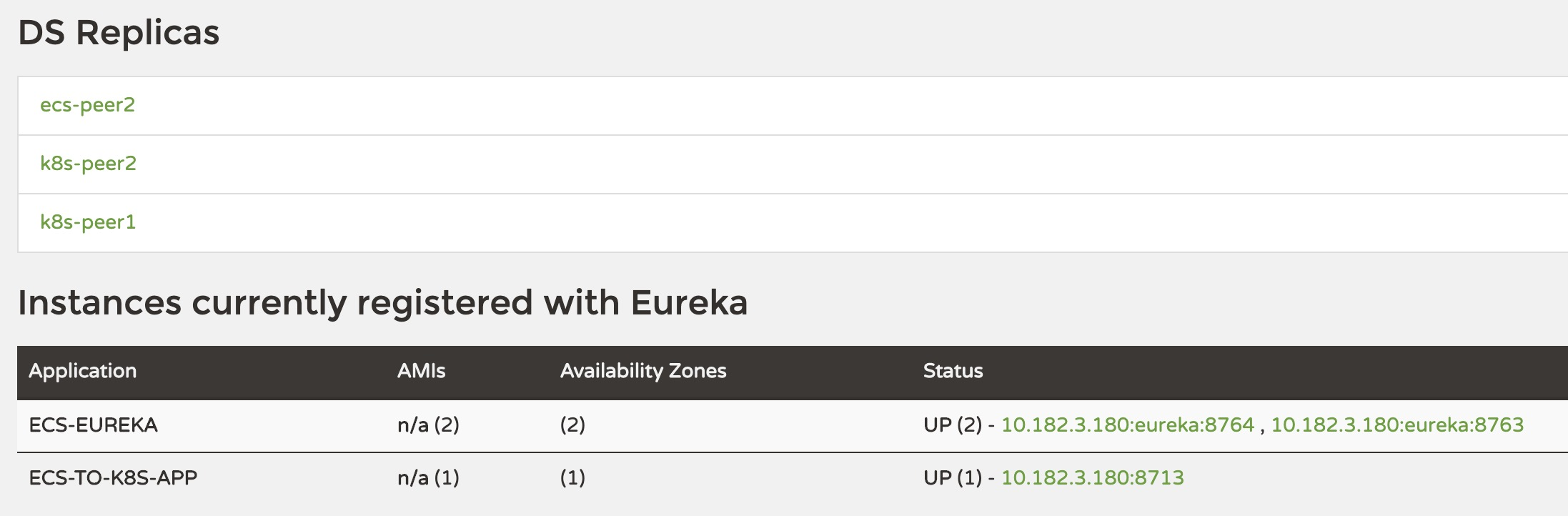

Visit k8s-peer1:8761 or k8s-peer2:8762 and you will see the ECS Eureka instances ecs-peer1:8763 and ecs-peer2:8764 registered in the k8s-zone.

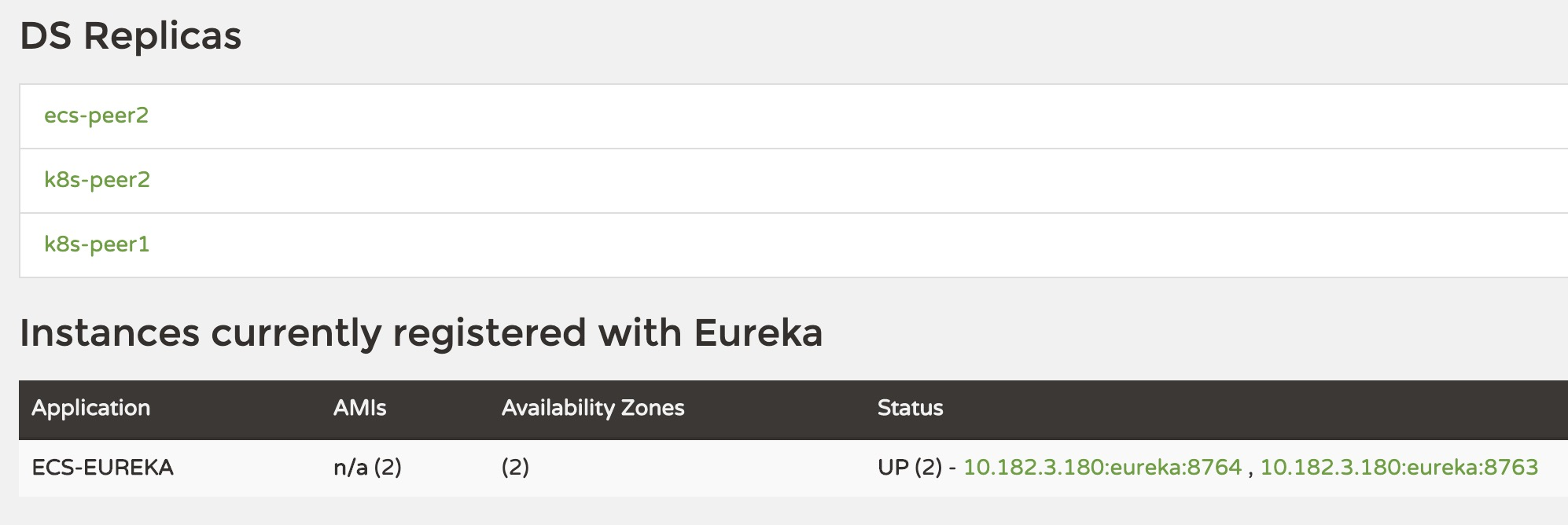

Visit ecs-peer1:8763 or ecs-peer2:8764 and you will see that the k8s Eureka instances are not registered in the ecs-zone.

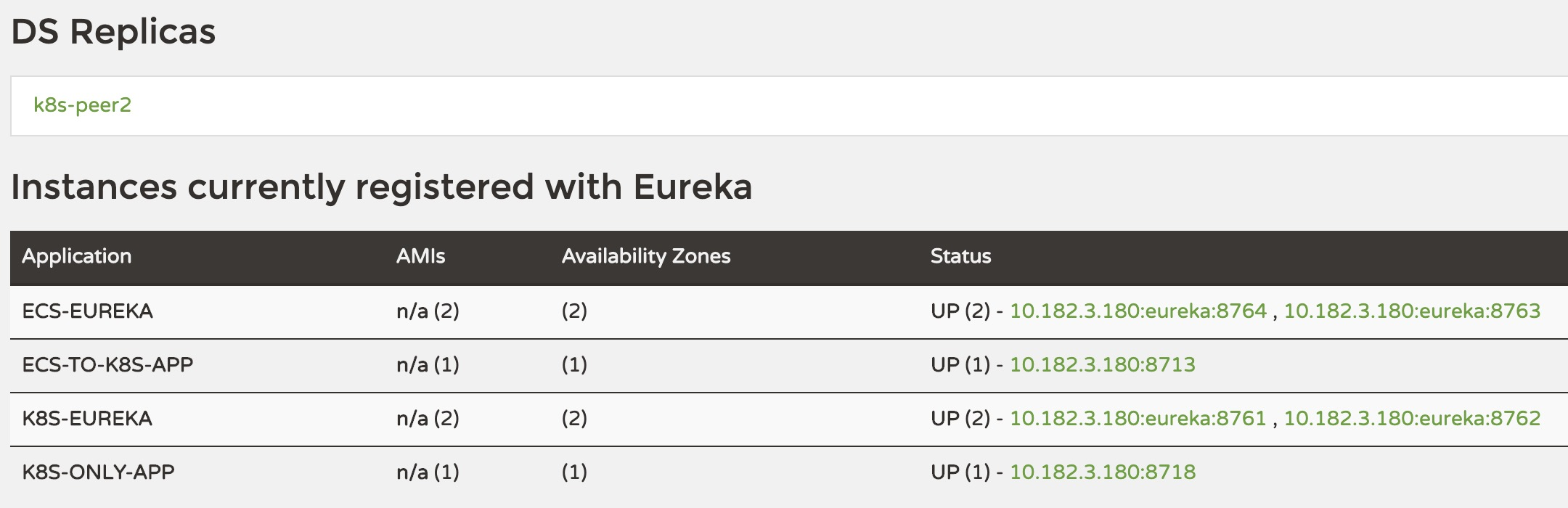

Next, register the application ecs-to-k8s-app via ecs-peer1:8763 and k8s-only-app via k8s-peer1:8761. The ecs-to-k8s-app registration is synced to the k8s-zone, but the k8s-only-app registration is not synced to the ecs-zone.

When you enter the full rollout phase and want to switch to two-way registration, simply change the k8s Eureka instance configuration to the following.

Understanding Eureka cluster replication principles

Eureka treats all nodes declared for the zones listed in eureka.client.availability-zones (each zone's node URLs coming from eureka.client.service-url) as its list of peers to replicate to. This is how the one-way registration above was implemented.
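The peer-list rule can be sketched as follows. This is a simplified, hypothetical model (the real logic lives in Eureka's EndpointUtils and PeerEurekaNodes and handles more cases): it walks the zones in availability-zones in order, collects each zone's service URLs, and skips the server's own URL. The hostnames and ports come from the example above; the /eureka/ path, and the assumption that only the ECS servers list the k8s-zone, are for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of peer-list resolution; not Eureka's actual code.
class PeerResolution {
    /**
     * Collects the service URLs of every zone listed in availability-zones,
     * in order, skipping duplicates and the server's own URL.
     */
    static List<String> peers(List<String> availabilityZones,
                              Map<String, List<String>> serviceUrlByZone,
                              String selfUrl) {
        List<String> peers = new ArrayList<>();
        for (String zone : availabilityZones) {
            for (String url : serviceUrlByZone.getOrDefault(zone, List.of())) {
                // A server never replicates to itself.
                if (!url.equals(selfUrl) && !peers.contains(url)) {
                    peers.add(url);
                }
            }
        }
        return peers;
    }
}
```

In this model, an ECS server that lists both ecs-zone and k8s-zone gets ecs-peer2, k8s-peer1, and k8s-peer2 as peers, while a k8s server that lists only k8s-zone gets just its k8s sibling, so registrations flow from ECS to k8s but not back. Adding ecs-zone to the k8s servers' zones corresponds to the switch to two-way registration.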

The Eureka cluster replication code lives in class PeerAwareInstanceRegistryImpl in package com.netflix.eureka.registry. Take instance registration as an example.

A Eureka server handles both application registration (isReplication=false) and registrations replicated from peers (isReplication=true) through the register method. For an application registration, PeerAwareInstanceRegistryImpl replicates the registration to the other nodes in the cluster once it succeeds locally; for a registration replicated from another node, processing ends as soon as the local registration succeeds.
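A stripped-down model of that rule makes the behavior easy to check. The sketch below is hypothetical and much simpler than PeerAwareInstanceRegistryImpl (no leases, no batching, synchronous calls; the class name EurekaPeerModel is made up): a node always registers locally, forwards only registrations it received with isReplication=false, and returns immediately for replicated ones.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical, simplified model of one Eureka server; not the real PeerAwareInstanceRegistryImpl.
class EurekaPeerModel {
    final String name;
    final Set<String> registry = new LinkedHashSet<>();
    final List<EurekaPeerModel> peers = new ArrayList<>();
    int replicationsReceived = 0;

    EurekaPeerModel(String name) {
        this.name = name;
    }

    void register(String app, boolean isReplication) {
        registry.add(app);            // the local registration always happens
        if (isReplication) {
            replicationsReceived++;
            return;                   // a replicated registration is never forwarded again
        }
        for (EurekaPeerModel peer : peers) {
            peer.register(app, true); // an application registration is pushed to every declared peer
        }
    }
}
```

If the early return were removed, three fully meshed nodes in this model would keep forwarding the same entry to each other until the call stack overflowed, which is the runaway replication described next.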

The reason for this design: if replicated registrations were forwarded again, every registration would bounce between peers indefinitely, and soon after any application registered, the NICs of all Eureka nodes would be saturated.

Therefore, for cross-cluster replication, do not put multiple nodes behind a single domain name and replicate through that domain; declare every node that needs to be synchronized individually in the configuration.

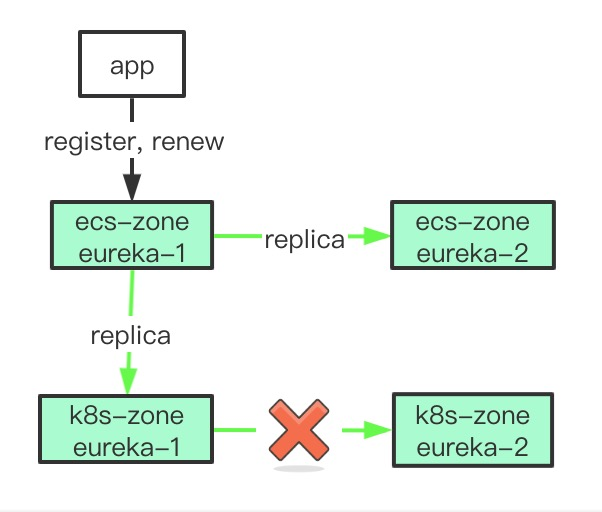

In the diagram above, the app's registration reaches eureka-1 in the ecs-zone, which replicates it to eureka-2 in the ecs-zone and to eureka-1 in the k8s-zone, but not to eureka-2 in the k8s-zone: eureka-1 in the k8s-zone received the entry as a replication, so it does not forward it. Over time, this leaves individual Eureka instances in the k8s-zone missing a large number of registered applications.
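The pitfall in the diagram can be reproduced with a small simulation. It is a hypothetical sketch: the shared domain is assumed to behave like a round-robin load balancer that hands each request to one backend, and replication follows the same "never forward a replicated entry" rule as above. All names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Supplier;

// Hypothetical simulation of replicating through one shared domain; not Eureka's code.
class SharedDomainDemo {
    static class Server {
        final Set<String> registry = new LinkedHashSet<>();
        // Each peer address resolves to some server per request.
        final List<Supplier<Server>> peers = new ArrayList<>();

        void register(String app, boolean isReplication) {
            registry.add(app);
            if (isReplication) {
                return; // replicated entries are not forwarded
            }
            for (Supplier<Server> peer : peers) {
                peer.get().register(app, true);
            }
        }
    }

    /** Assumed round-robin "load balancer": one domain name in front of several servers. */
    static Supplier<Server> sharedDomain(List<Server> backends) {
        int[] next = {0};
        return () -> backends.get(next[0]++ % backends.size());
    }
}
```

When ecs eureka-1 declares ecs eureka-2 directly but reaches both k8s servers through one shared domain, only the k8s server picked by the balancer gets the entry. Declaring each k8s server as a separate peer instead makes the entry reach both, which is why every node must be listed individually.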

Eureka cluster in k8s

Every Eureka node needs to know the addresses of the other nodes and communicate with them through fixed addresses, so the appropriate way to deploy a Eureka cluster in k8s is a StatefulSet. In the StatefulSet YAML, you only need to replace the fixed IPs with fixed domain names, so that part of the configuration is omitted here.

Note that several Services are used here to expose the underlying Eureka instances, for the reasons given in the comments. The StatefulSet derives each node's domain name from the Service it declares: if the StatefulSet is named eureka and its Service is eureka-svc, the nodes' fixed domain names are eureka-0.eureka-svc, eureka-1.eureka-svc, …, eureka-n.eureka-svc.
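The naming rule can be captured in a small helper that builds a peer list from the StatefulSet's stable names. It is a hypothetical sketch: the port 8761 and the /eureka/ path are assumptions, and the resulting comma-separated string is the kind of value you would put in a property such as eureka.client.service-url.defaultZone.

```java
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Hypothetical helper; port and path are assumptions, not values from this post.
class StatefulSetPeers {
    /** Stable Pod host name: <statefulset>-<ordinal>.<service>. */
    static String podHost(String statefulSet, int ordinal, String service) {
        return statefulSet + "-" + ordinal + "." + service;
    }

    /** Comma-separated Eureka URLs for ordinals 0 .. replicas-1. */
    static String serviceUrl(String statefulSet, String service, int replicas, int port) {
        return IntStream.range(0, replicas)
                .mapToObj(i -> "http://" + podHost(statefulSet, i, service) + ":" + port + "/eureka/")
                .collect(Collectors.joining(","));
    }
}
```

Because the names depend only on the StatefulSet name, the Service name, and the ordinal, they stay the same when a Pod is rescheduled, which is what lets the Eureka configuration use them in place of fixed IPs.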

As mentioned above, replication between Eureka nodes cannot go through a single load-balanced domain, so you cannot simply put an Ingress in front of eureka-svc and expose it to the ECS Eureka cluster. Instead, select each Eureka instance with its own Service (using a per-Pod StatefulSet label, such as statefulset.kubernetes.io/pod-name, as the selector) and expose each one separately through NodePort or Ingress.

You should now understand how to synchronize registrations between k8s Eureka and an external Eureka cluster.

Why turn off Eureka self-preservation

Eureka's self-preservation feature is designed for network partitions: when it is triggered, Eureka disables its eviction mechanism and stops removing service instances. A good introductory article on it is available here.

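By default, self-preservation is triggered when the renewals Eureka receives per minute fall below 85% of the expected number (eureka.server.renewal-percent-threshold=0.85), that is, when more than 15% of the expected heartbeats are missing.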

Early in the migration, most instances in the k8s Eureka cluster come from the ECS cluster. A little network jitter, or a restart of ECS Eureka, is enough to trigger self-preservation on k8s Eureka. As a result, k8s Eureka keeps a large number of expired instances, consistency becomes very poor, and service calls fail frequently. Therefore, I turned off self-preservation in practice.
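The threshold arithmetic behind this can be sketched in a few lines. This is a simplified, hypothetical model of the check, assuming the default renewal-percent-threshold of 0.85 and clients renewing every 30 seconds (two renewals per minute each); the class and method names are made up.

```java
// Hypothetical, simplified model of Eureka's self-preservation check; not the actual code.
class SelfPreservation {
    /** Renewals per minute below which self-preservation kicks in. */
    static int renewsPerMinThreshold(int expectedClients, int renewalIntervalSecs, double percentThreshold) {
        return (int) (expectedClients * (60.0 / renewalIntervalSecs) * percentThreshold);
    }

    /** Eviction stays enabled only while renewals in the last minute exceed the threshold. */
    static boolean selfPreservationActive(int renewsLastMin, int threshold) {
        return threshold > 0 && renewsLastMin <= threshold;
    }
}
```

For example, with 100 instances the threshold is 100 × 2 × 0.85 = 170 renewals per minute. If, hypothetically, 80 of them are ECS instances whose renewals stop arriving while ECS Eureka restarts, only about 20 × 2 = 40 renewals remain and self-preservation kicks in. Setting eureka.server.enable-self-preservation=false, as in the configuration notes above, turns this check off so that eviction stays enabled.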

Reference:

https://www.zeng.dev/post/20200428-eureka-multil-cluster-replica/